The Internet Archive recently announced an apparent change of policy concerning the collection of web sites for their long-term preservation effort:

- Robots.txt meant for search engines don’t work well for web archives

- Archivierung des Internets: Internet Archive ignoriert künftig robots.txt (in German: “Archiving the Internet: Internet Archive will ignore robots.txt in future”)

Before this announcement, it was commonly believed that you could ask the Internet Archive not to make copies of a site by adding a statement to the site’s robots.txt file, which would be honored.

The announcement, posted April 17, 2017, reads in part:

A few months ago we stopped referring to robots.txt files on U.S. government and military web sites for both crawling and displaying web pages (though we respond to removal requests sent to info@archive.org). As we have moved towards broader access it has not caused problems, which we take as a good sign. We are now looking to do this more broadly.

However, as I had already noted on Stack Exchange in March 2017, robots.txt had not been fully honored for at least 10 years:

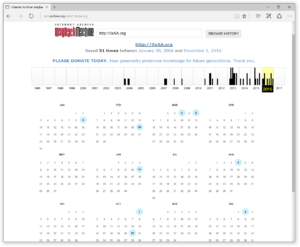

I just did a quick test, commenting out the ia_archiver Disallow entry for a site that had it for at least the past 10 years. Then I looked the site up on archive.org/web, and it showed grabs it had collected in 2007, 2008, 2009, 2011, 2012, 2013, 2014, 2015, 2016 and 2017! This means that Archive.org never strictly honored what others thought to be a “do not archive” statement during these years; it was merely not exposing the archived copies.

The web site for which I sacrificed the robots.txt continuity was 0xAA.org (an event we used to organize), and here is a screenshot taken during the test:

The original robots.txt file was reinstated after the test, again leading to the familiar “Page cannot be displayed due to robots.txt.” message. The overview also shows captures from before 2007, and the copies saved by the Internet Archive show that the exclusion rule was already present in 2003. However, I did not have the backups at hand to double-check the change history of that older period.
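For readers unfamiliar with the mechanism, here is a minimal sketch of what such an exclusion rule means to a crawler that chooses to honor it. It uses Python's standard `urllib.robotparser`; the two robots.txt bodies and the `example.org` URL are illustrative assumptions, not the actual contents of the 0xAA.org file, and the snippet says nothing about how the Internet Archive's own crawler is implemented.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt bodies (assumed for this sketch, not copied from 0xAA.org).
ROBOTS_WITH_RULE = """\
User-agent: ia_archiver
Disallow: /
"""

# The same rule commented out, as in the quick test described above.
ROBOTS_RULE_COMMENTED_OUT = """\
# User-agent: ia_archiver
# Disallow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if a robots.txt-honoring crawler may fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


PAGE = "http://example.org/index.html"  # placeholder URL

blocked = is_allowed(ROBOTS_WITH_RULE, "ia_archiver", PAGE)
unblocked = is_allowed(ROBOTS_RULE_COMMENTED_OUT, "ia_archiver", PAGE)
other_bot = is_allowed(ROBOTS_WITH_RULE, "Googlebot", PAGE)

print(blocked, unblocked, other_bot)
```

The rule only expresses a request. Whether a crawler skips the page, or collects it and merely hides it, is not something the file itself can enforce or reveal.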

When I originally found this out, I thought that this was interesting to know, but not necessarily newsworthy. However, the blog post that followed seemed to express an official change in direction that gave me second thoughts. While robots.txt is not an official, compulsory declaration of permission or non-permission, neither is the email takedown request mechanism which is proposed as an alternative. I believe in the importance of asking before doing, and it felt like robots.txt was a good element to maintain a balance of acceptance. If the Internet Archive was in a legal gray area with its saving and making available of copyrighted works, could things get worse once it stops respecting robots.txt?

I am a big admirer of the Internet Archive. I can’t imagine the loss of the early web of the 1990s, which they worked so carefully to preserve. I have visited them several times, I have friends working there, and I would entrust them with some of our own works, if something happened to us.

Having said that, does the public not deserve full disclosure and transparency, rather than what may be seen as a careful exercise in obfuscation? “A few months” (limited to “U.S. government and military web sites”) and “10 years” (on all sites?) are not the same thing. We are reassured that this new data harvesting “has not caused problems”. But what about the “right to be forgotten”?

Individual users can already collect screen grabs (like I did), or save pages, or print them. But we have learned that our traditional rules don’t always scale to what becomes possible in a massively automated new world order.

These web grabs, like truth itself, may be helpful, or haunting. What if a version containing a tragic error were to be preserved against the will of the publisher? What about our juvenile mistakes? How long until somebody requests these “few months” (10+ years) for court use with a simple subpoena?

I do not oppose preserving the public web for posterity, even against the will of the original content publishers. I cited some more difficult test cases before, in what I find a fascinating voyage between “free will” and “encrypted mind”. However, I am concerned about making that material available earlier, in a way that goes against the free choice of individuals who may have something to say about that content, and about this not being disclosed with the transparency and debate it probably deserves.

Some thoughts on how this could be improved:

- Any organization like the Internet Archive should enjoy the privileges and responsibilities that come with Library status, including special powers to archive works while they are still protected by copyright, as well as being protected under laws which would otherwise prohibit the circumvention of access-control measures. This could include not just web content, but also software (including copy-protected games) and other digital content. I am aware of precedents, e.g. National Libraries in some states of the former Yugoslavia, where it became necessary for each to individually preserve the works of a fragmenting country.

- Robots.txt and/or the <meta> tag could be extended to separately express consent to long-term preservation and consent to dissemination of cached versions during the copyright term (or another shorter period, which could be specified). Adhering to this might not be a universal requirement, but at least the original intention could be taken into account later.
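To make the second idea a little more concrete, here is a rough sketch of how separate consent for preservation and for dissemination might be read from a robots.txt file. The field names `Archive-Preserve`, `Archive-Disseminate` and `Archive-Disseminate-After-Years` are entirely hypothetical: they are not part of any robots.txt standard and no crawler is known to read them. The parser is deliberately simplistic (exact user-agent match only, no wildcard handling).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ArchiveConsent:
    """Consent expressed by the publisher; None means 'not stated'."""
    preserve: Optional[bool] = None
    disseminate: Optional[bool] = None
    disseminate_after_years: Optional[int] = None


def parse_archive_consent(robots_txt: str, user_agent: str) -> ArchiveConsent:
    """Read the hypothetical Archive-* fields for one user agent."""
    consent = ArchiveConsent()
    agents = []        # user agents of the group currently being read
    in_rules = False   # True once the group has at least one rule line
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if in_rules:  # a User-agent line after rules starts a new group
                agents, in_rules = [], False
            agents.append(value.lower())
            continue
        in_rules = True
        if user_agent.lower() not in agents:
            continue
        if field == "archive-preserve":
            consent.preserve = value.lower() == "yes"
        elif field == "archive-disseminate":
            consent.disseminate = value.lower() == "yes"
        elif field == "archive-disseminate-after-years" and value.isdigit():
            consent.disseminate_after_years = int(value)
    return consent


# Hypothetical file: keep a copy for posterity, but do not show it for 25 years.
EXAMPLE = """\
User-agent: ia_archiver
Archive-Preserve: yes
Archive-Disseminate: no
Archive-Disseminate-After-Years: 25
"""

consent = parse_archive_consent(EXAMPLE, "ia_archiver")
print(consent)
```

Keeping "not stated" distinct from "no" matters here: an archive could then record the publisher's original intention, or the absence of one, alongside each capture and take it into account later.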